AWS SageMaker: 7 Powerful Reasons to Use This Ultimate ML Tool

Looking to build, train, and deploy machine learning models at scale? AWS SageMaker is a fully managed service that covers the entire ML lifecycle, so developers and data scientists can take ideas to production quickly and without the infrastructure headache.

What Is AWS SageMaker and Why It Matters

AWS SageMaker is a fully managed machine learning (ML) service provided by Amazon Web Services (AWS) that enables developers and data scientists to build, train, and deploy ML models quickly and efficiently. Unlike traditional ML workflows that require significant setup and maintenance, SageMaker removes the heavy lifting by offering integrated tools for every step of the machine learning process—from data preparation to model deployment.

Core Definition and Purpose

At its heart, AWS SageMaker is designed to democratize machine learning. It allows users with varying levels of expertise to create high-quality models without needing deep knowledge of underlying infrastructure. Whether you’re a beginner experimenting with your first model or a seasoned ML engineer working on complex deep learning architectures, SageMaker provides the tools and flexibility to succeed.

- Eliminates the need to manage servers or clusters manually.

- Supports popular ML frameworks like TensorFlow, PyTorch, and MXNet.

- Offers pre-built algorithms optimized for performance and scalability.

How AWS SageMaker Fits Into the Cloud Ecosystem

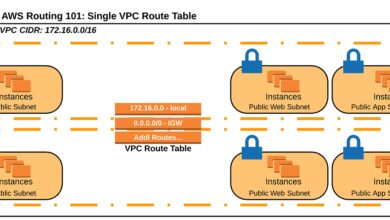

SageMaker is deeply integrated into the broader AWS ecosystem. It works seamlessly with services like Amazon S3 for data storage, IAM for security, CloudWatch for monitoring, and Lambda for serverless computing. This integration allows for end-to-end workflows where data can flow securely from storage to training to deployment, all within the AWS environment.

For example, you can store raw data in S3, use SageMaker Data Wrangler to clean and preprocess it, train a model using SageMaker Training Jobs, and then deploy the model via SageMaker Endpoints—all orchestrated through the AWS console or SDKs.

“SageMaker has transformed how we approach machine learning at scale. It’s not just about faster training—it’s about reducing time-to-insight.” — AWS Customer, Financial Services Firm

Key Features That Make AWS SageMaker Stand Out

One of the biggest reasons AWS SageMaker has gained widespread adoption is its comprehensive set of features that cover every stage of the machine learning lifecycle. From notebook environments to automatic model tuning, SageMaker offers tools that are both powerful and user-friendly.

Jupyter Notebook Integration with SageMaker Studio

SageMaker Studio is a web-based IDE for machine learning that brings together all the tools you need in one place. It is built on JupyterLab and extends it with visual workflow tools, real-time collaboration, and integrated debugging.

- Single pane of glass for all ML activities.

- Real-time collaboration with team members.

- Version control integration with Git.

You can open a notebook in Studio quickly, connect it to your S3 buckets, and start exploring data right away. The Studio environment also supports multiple users, making it ideal for teams working on shared projects.

Automatic Model Tuning (Hyperparameter Optimization)

One of the most time-consuming parts of machine learning is tuning hyperparameters. AWS SageMaker automates this process, using Bayesian optimization by default (random search, grid search, and Hyperband are also available) to find a strong combination of parameters for your model.

- Supports both built-in algorithms and custom models.

- Can run many training jobs per tuning job, with several in parallel (limits depend on account quotas).

- Tracks performance metrics and selects the optimal model.

This feature, known as SageMaker Automatic Model Tuning, significantly reduces the trial-and-error phase and improves model accuracy without requiring manual intervention.
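As a rough sketch of what a tuning setup looks like, the function below builds the `HyperParameterTuningJobConfig` dictionary that boto3's `create_hyper_parameter_tuning_job` call accepts. The function name, the parameter ranges, and the job limits are illustrative assumptions; `validation:auc` and the `eta` / `max_depth` names assume the built-in XGBoost algorithm.

```python
def build_tuning_config(max_jobs=20, max_parallel=2):
    """Build a HyperParameterTuningJobConfig dict (illustrative values)."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",  # assumes built-in XGBoost metrics
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # The API expects range bounds as strings.
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3",
                 "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10",
                 "ScalingType": "Auto"},
            ],
        },
    }


tuning_config = build_tuning_config()
```

With the Bayesian strategy, keeping the parallel job count low lets later jobs learn from earlier results, at the cost of a longer wall-clock time.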

Built-in Algorithms and Framework Support

AWS SageMaker comes with a library of built-in algorithms optimized for performance and scalability. These include linear learners, XGBoost, K-means clustering, principal component analysis (PCA), and deep learning models like image classification and object detection.

In addition, SageMaker supports popular open-source frameworks such as:

- TensorFlow

- PyTorch

- Apache MXNet

You can also bring your own custom algorithms using Docker containers, giving you full control over the training environment.
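To make the custom-container route more concrete, here is a minimal sketch of a training entry point that follows SageMaker's documented container layout under `/opt/ml`. The `train` function, its `base_dir` parameter, and the toy "model" it writes are assumptions for illustration, not part of any AWS library.

```python
import json
from pathlib import Path


def train(base_dir="/opt/ml"):
    """Toy training entry point following the SageMaker container layout."""
    base = Path(base_dir)

    # SageMaker writes hyperparameters here; every value arrives as a string.
    hp_file = base / "input" / "config" / "hyperparameters.json"
    hyperparameters = json.loads(hp_file.read_text()) if hp_file.exists() else {}

    # Each input channel is mounted under input/data/<channel_name>.
    train_dir = base / "input" / "data" / "train"
    n_files = len(list(train_dir.glob("*"))) if train_dir.exists() else 0

    # Files written to the model directory are packaged and uploaded to S3.
    model_dir = base / "model"
    model_dir.mkdir(parents=True, exist_ok=True)
    artifact = model_dir / "model.json"
    artifact.write_text(json.dumps(
        {"hyperparameters": hyperparameters, "training_files": n_files}
    ))
    return artifact
```

Inside a real container, the image's `train` executable would call `train()` with the default path; passing a temporary directory makes the same logic easy to test locally.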

End-to-End Machine Learning Workflow with AWS SageMaker

The true power of AWS SageMaker lies in its ability to support a complete ML workflow—from data ingestion to model deployment and monitoring. This end-to-end capability makes it one of the most comprehensive platforms available today.

Data Preparation and Exploration Using SageMaker Data Wrangler

Data Wrangler is a visual tool within SageMaker that simplifies data preprocessing. It allows you to import, clean, transform, and visualize data without writing extensive code.

- Over 300 built-in transformations (e.g., normalization, encoding, imputation).

- One-click integration with S3, Redshift, and databases.

- Exportable data pipelines for reproducibility.

With Data Wrangler, you can reduce data preparation time from days to hours, enabling faster iteration and experimentation.
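Data Wrangler applies its transformations through a visual interface, but the three examples named above (imputation, normalization, encoding) are easy to picture in plain Python. The helper names below are hypothetical and this is not Data Wrangler's implementation, only a sketch of what each transformation does to a column.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(values):
    """Map each category to a 0/1 indicator vector (columns sorted by name)."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


ages = impute_mean([20, None, 40])               # [20, 30.0, 40]
scaled = min_max_normalize(ages)                 # [0.0, 0.5, 1.0]
plans = one_hot_encode(["pro", "free", "pro"])   # [[0, 1], [1, 0], [0, 1]]
```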

Model Training and Distributed Computing

SageMaker provides scalable training capabilities that can handle everything from small datasets to massive deep learning models. You can choose from a variety of instance types, including GPU-powered instances for accelerated training.

- Supports distributed training across multiple nodes.

- Enables spot instance usage to reduce costs by up to 90%.

- Integrates with SageMaker Debugger to monitor training jobs in real time.

The SageMaker Training Job feature automates the setup of training environments, pulls data from S3, runs the training script, and saves the model artifacts—all without manual intervention.
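The pieces a training job needs (data location, container image, instance type, output path) map directly onto the request for boto3's `create_training_job`. The sketch below only builds that request; the function name, default instance type, and time limits are assumptions, and the image URI and role ARN must come from your own account.

```python
def build_training_request(job_name, image_uri, role_arn, train_s3_uri,
                           output_s3_uri, use_spot=True,
                           max_runtime_s=3600, max_wait_s=7200):
    """Build keyword arguments for the boto3 create_training_job call."""
    request = {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "ContentType": "text/csv",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",  # illustrative choice
            "InstanceCount": 1,
            "VolumeSizeInGB": 10,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_s},
    }
    if use_spot:
        # Spot jobs may wait for capacity, so the wait limit must cover the runtime.
        if max_wait_s < max_runtime_s:
            raise ValueError("max_wait_s must be >= max_runtime_s")
        request["EnableManagedSpotTraining"] = True
        request["StoppingCondition"]["MaxWaitTimeInSeconds"] = max_wait_s
    return request
```

With credentials configured, the request could be submitted with `boto3.client("sagemaker").create_training_job(**request)`. Spot jobs can be interrupted, so longer runs usually also set a checkpoint location.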

Model Deployment and Real-Time Inference

Once a model is trained, SageMaker makes deployment simple. You can deploy models as real-time endpoints, batch transform jobs, or serverless inference endpoints.

- Real-time endpoints provide low-latency predictions (ideal for web apps).

- Batch transform processes large volumes of data asynchronously.

- Serverless Inference (generally available since 2022) automatically scales and charges only for usage.

These deployment options ensure that your model can serve predictions efficiently, whether you’re handling thousands of requests per second or processing nightly reports.
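For a real-time endpoint, a prediction is one call to `invoke_endpoint` on the `sagemaker-runtime` client. The sketch below assumes an endpoint that accepts CSV rows and returns comma- or newline-separated scores, as the built-in XGBoost container does; `to_csv_payload` and `predict` are hypothetical helper names.

```python
def to_csv_payload(rows):
    """Serialize rows of feature values into a headerless CSV string."""
    return "\n".join(",".join(str(v) for v in row) for row in rows)


def predict(runtime_client, endpoint_name, rows):
    """Send rows to a real-time endpoint and parse the numeric scores."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=to_csv_payload(rows),
    )
    text = response["Body"].read().decode("utf-8")
    # Accept either comma-separated or newline-separated output.
    return [float(x) for x in text.replace("\n", ",").split(",") if x.strip()]
```

In practice `runtime_client` would be `boto3.client("sagemaker-runtime")`; passing it in as an argument keeps the function easy to test with a stub.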

Advanced Capabilities: SageMaker Pipelines and MLOps

As machine learning becomes more central to business operations, the need for reproducible, auditable, and automated workflows grows. AWS SageMaker addresses this with its MLOps tools, particularly SageMaker Pipelines and Model Registry.

SageMaker Pipelines for CI/CD in ML

SageMaker Pipelines is a fully managed service that allows you to create, automate, and manage ML workflows. It’s essentially CI/CD for machine learning, enabling teams to version models, run tests, and deploy updates systematically.

- Define pipelines using Python SDK.

- Trigger pipelines based on events (e.g., new data arrival).

- Integrate with AWS CodePipeline for enterprise DevOps.

This ensures consistency across development, testing, and production environments, reducing errors and improving deployment speed.
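The event-driven trigger mentioned above can be sketched as a small handler that reacts to an S3 upload notification and starts a pipeline run through boto3's `start_pipeline_execution`. The pipeline name `churn-pipeline` and the parameter name `InputDataUri` are hypothetical; they would have to match whatever your pipeline actually defines.

```python
from urllib.parse import unquote_plus


def start_pipeline_for_new_object(event, sagemaker_client,
                                  pipeline_name="churn-pipeline"):
    """Start a pipeline execution for the first object in an S3 event."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    # Object keys in S3 event notifications are URL-encoded.
    key = unquote_plus(record["object"]["key"])
    response = sagemaker_client.start_pipeline_execution(
        PipelineName=pipeline_name,
        PipelineParameters=[
            {"Name": "InputDataUri", "Value": f"s3://{bucket}/{key}"},
        ],
    )
    return response["PipelineExecutionArn"]
```

Inside a Lambda function, this would be called from the handler with `boto3.client("sagemaker")` as the client.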

Model Registry and Governance

The SageMaker Model Registry acts as a centralized repository for models. Each model can be tagged with metadata, associated with experiments, and approved for production through a formal workflow.

- Supports model versioning and lineage tracking.

- Enables approval workflows for compliance (e.g., HIPAA, GDPR).

- Integrates with SageMaker Experiments to track performance over time.

This level of governance is critical for regulated industries like healthcare and finance, where model transparency and auditability are mandatory.
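An approval step in the registry comes down to changing a model package's approval status, which boto3 exposes as `update_model_package`. The wrapper below is a minimal sketch; the function name and the local validation are assumptions added for illustration.

```python
VALID_STATUSES = {"Approved", "Rejected", "PendingManualApproval"}


def set_approval_status(sagemaker_client, model_package_arn, status, note=""):
    """Update a model package's approval status and return the request sent."""
    if status not in VALID_STATUSES:
        raise ValueError(f"status must be one of {sorted(VALID_STATUSES)}")
    kwargs = {
        "ModelPackageArn": model_package_arn,
        "ModelApprovalStatus": status,
    }
    if note:
        # A free-text note helps auditors see why the decision was made.
        kwargs["ApprovalDescription"] = note
    sagemaker_client.update_model_package(**kwargs)
    return kwargs
```

Restricting who may call this action through IAM is what turns the status field into an actual approval gate.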

Monitoring and Drift Detection with SageMaker Model Monitor

Even the best models can degrade over time due to data drift or concept drift. SageMaker Model Monitor helps detect these issues by continuously analyzing input data and model predictions.

- Creates baseline statistics from training data.

- Compares real-time data against baselines.

- Triggers alerts when anomalies are detected.

By catching drift early, organizations can retrain models proactively, ensuring sustained accuracy and reliability.
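The baseline-versus-live comparison can be illustrated with a deliberately simple check: flag a feature when its live mean moves too far from the training mean. This is a simplified stand-in, not the statistics the monitoring service actually computes, and the function names and the threshold of 3 standard deviations are assumptions.

```python
import statistics


def build_baseline(values):
    """Summarize a training feature by its mean and standard deviation."""
    return {"mean": statistics.fmean(values), "std": statistics.pstdev(values)}


def drifted(baseline, live_values, threshold=3.0):
    """Return True if the live mean shifts more than `threshold` baseline stds."""
    live_mean = statistics.fmean(live_values)
    if baseline["std"] == 0:
        return live_mean != baseline["mean"]
    shift = abs(live_mean - baseline["mean"]) / baseline["std"]
    return shift > threshold


baseline = build_baseline([10, 12, 11, 9, 13])
stable = drifted(baseline, [11, 12, 10])    # False: live mean equals baseline mean
shifted = drifted(baseline, [30, 31, 29])   # True: live mean is far from baseline
```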

Cost Management and Pricing Model of AWS SageMaker

Understanding the cost structure of AWS SageMaker is crucial for effective budgeting and resource optimization. While SageMaker is a powerful tool, its pricing can vary significantly depending on usage patterns, instance types, and features enabled.

Breakdown of SageMaker Pricing Components

SageMaker uses a pay-as-you-go model with separate charges for different components:

- Notebook Instances: Hourly rate based on instance type (e.g., ml.t3.medium, ml.p3.2xlarge).

- Training Jobs: Billed per second for compute time and data processing.

- Hosting/Inference: Charges for real-time endpoints or batch transforms.

- Storage: Model artifacts and logs stored in S3 are billed separately.

For example, a GPU instance used for deep learning training will cost more than a CPU instance, but can reduce training time dramatically.
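The trade-off is simple arithmetic: with per-second billing, cost is run time multiplied by the hourly rate. The rates and durations below are made-up numbers chosen for illustration, not AWS prices.

```python
def training_cost(seconds, hourly_rate_usd):
    """Cost of a job billed per second at a given hourly rate."""
    return seconds / 3600 * hourly_rate_usd


# Hypothetical figures: the GPU instance costs 16x more per hour
# but finishes the same job 16x faster.
cpu_cost = training_cost(seconds=8 * 3600, hourly_rate_usd=0.25)
gpu_cost = training_cost(seconds=30 * 60, hourly_rate_usd=4.00)

# The GPU is the cheaper option whenever its speedup exceeds the price ratio.
price_ratio = 4.00 / 0.25
```

In this made-up case both jobs cost the same, so the GPU wins on turnaround time alone; any speedup above the price ratio would make it cheaper outright.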

Cost-Saving Strategies and Best Practices

To optimize costs, AWS recommends several strategies:

- Use Spot Instances for training jobs (up to 90% discount).

- Shut down notebook instances when not in use.

- Leverage Serverless Inference for unpredictable workloads.

- Use SageMaker Savings Plans for predictable usage.

Additionally, monitoring tools like AWS Cost Explorer, combined with cost allocation tags on SageMaker resources, can help track spending by team, project, or model.
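Shutting down idle notebooks is easy to automate. The sketch below lists running notebook instances with boto3's `list_notebook_instances` and stops each one with `stop_notebook_instance`; the function name and the `keep` allow-list are assumptions, and a real job would probably also check for recent activity before stopping anything.

```python
def stop_running_notebooks(sagemaker_client, keep=()):
    """Stop every in-service notebook instance not named in `keep`."""
    stopped = []
    kwargs = {"StatusEquals": "InService"}
    while True:
        page = sagemaker_client.list_notebook_instances(**kwargs)
        for notebook in page["NotebookInstances"]:
            name = notebook["NotebookInstanceName"]
            if name not in keep:
                sagemaker_client.stop_notebook_instance(NotebookInstanceName=name)
                stopped.append(name)
        # Follow pagination until the service stops returning a token.
        token = page.get("NextToken")
        if not token:
            return stopped
        kwargs["NextToken"] = token
```

Run on a schedule (for example every evening), a function like this keeps forgotten instances from accruing hourly charges overnight.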

Free Tier and Trial Options

AWS offers a free tier for new users. At the time of writing, it includes:

- 250 hours of ml.t2.medium or ml.t3.medium notebook instances per month for 2 months.

- 60 hours of ml.t3.medium for training per month.

- 125 hours of ml.t2.medium or ml.t3.medium for hosting.

This allows developers and startups to experiment with SageMaker at no cost before scaling up.

Security, Compliance, and Access Control in AWS SageMaker

Security is a top priority when dealing with sensitive data and models. AWS SageMaker provides robust security features that align with enterprise-grade requirements and regulatory standards.

IAM Roles and Fine-Grained Access Control

SageMaker integrates with AWS Identity and Access Management (IAM) to enforce least-privilege access. You can define roles that specify exactly which actions a user or service can perform.

- Restrict access to specific S3 buckets.

- Control who can create or delete endpoints.

- Audit actions via AWS CloudTrail.

For example, a data scientist might have read-only access to production data, while a DevOps engineer can deploy models but not modify training scripts.
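The read-only case can be written as an IAM policy document. The sketch below generates one for a single bucket; the function name and the bucket name `example-prod-data` are placeholders.

```python
import json


def read_only_bucket_policy(bucket):
    """IAM policy allowing listing and reading objects in one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {   # Reading applies to the objects inside it.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


policy_json = json.dumps(read_only_bucket_policy("example-prod-data"), indent=2)
```

Because the policy grants no `s3:PutObject` or `s3:DeleteObject`, a role carrying only this policy can read production data but cannot change it.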

Data Encryption and VPC Isolation

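SageMaker encrypts data at rest and in transit: API calls use TLS, and model artifacts and storage volumes can be encrypted with AWS KMS keys. Some protections, such as encrypting traffic between nodes in a distributed training job, must be enabled explicitly. You can also deploy SageMaker resources inside a Virtual Private Cloud (VPC) to isolate them from the public internet.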

- Use AWS KMS keys for encryption.

- Enable VPC endpoints to securely access S3 without going through the public internet.

- Apply security groups and network ACLs to control traffic.

This is especially important for organizations handling personally identifiable information (PII) or financial data.
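For training jobs, these controls are ordinary fields on the `create_training_job` request. The helper below is a sketch that adds them to an existing request dictionary; the function name and its defaults are assumptions, and the key, subnet, and security group IDs are placeholders.

```python
import copy


def harden_training_request(request, kms_key_id, subnet_ids,
                            security_group_ids, network_isolation=False):
    """Return a copy of a training request with encryption and VPC settings."""
    hardened = copy.deepcopy(request)
    # Encrypt model artifacts written to S3 and the attached storage volume.
    hardened.setdefault("OutputDataConfig", {})["KmsKeyId"] = kms_key_id
    hardened.setdefault("ResourceConfig", {})["VolumeKmsKeyId"] = kms_key_id
    # Run the training containers inside your own VPC.
    hardened["VpcConfig"] = {
        "Subnets": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
    }
    # Encrypt node-to-node traffic in distributed training jobs.
    hardened["EnableInterContainerTrafficEncryption"] = True
    # Optionally block all outbound network calls from the container.
    hardened["EnableNetworkIsolation"] = network_isolation
    return hardened
```

Network isolation is left off by default here because some training scripts need to reach other services at run time.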

Compliance with Industry Standards

AWS SageMaker is in scope for major AWS compliance programs and can support workloads subject to:

- GDPR (General Data Protection Regulation)

- HIPAA (Health Insurance Portability and Accountability Act)

- SOC 1, SOC 2, SOC 3

- PCI DSS (for payment processing)

This makes it suitable for use in healthcare, banking, government, and other regulated sectors.

Real-World Use Cases and Industry Applications of AWS SageMaker

AWS SageMaker is not just a theoretical platform—it’s being used by companies across industries to solve real business problems. From fraud detection to personalized recommendations, the applications are vast and impactful.

Fraud Detection in Financial Services

Banks and fintech companies use SageMaker to build models that detect fraudulent transactions in real time. By analyzing patterns in transaction data, these models can flag suspicious activity before it causes damage.

- Train models on historical fraud data.

- Deploy real-time inference endpoints to score transactions.

- Use SageMaker Ground Truth to label new fraud cases for retraining.

One global bank reduced false positives by 40% using a SageMaker-based anomaly detection model.

Personalized Recommendations in E-Commerce

Online retailers leverage SageMaker to power recommendation engines that suggest products based on user behavior. These models analyze browsing history, purchase patterns, and demographic data to deliver personalized experiences.

- Use SageMaker built-in algorithms like Factorization Machines.

- Train models on large-scale customer interaction data.

- Deploy via API to integrate with web and mobile apps.

A leading e-commerce platform increased conversion rates by 22% after implementing a SageMaker-powered recommendation system.

Predictive Maintenance in Manufacturing

Manufacturers use SageMaker to predict equipment failures before they occur. By analyzing sensor data from machines, models can identify early signs of wear and schedule maintenance proactively.

- Ingest IoT data via AWS IoT Core.

- Train time-series forecasting models.

- Trigger alerts via Amazon SNS or Lambda functions.

This approach has helped industrial companies reduce downtime by up to 50% and save millions in maintenance costs.

Getting Started with AWS SageMaker: A Step-by-Step Guide

Ready to dive in? Here’s a practical guide to help you get started with AWS SageMaker, whether you’re a beginner or transitioning from another ML platform.

Setting Up Your AWS Account and IAM Permissions

Before using SageMaker, ensure you have an AWS account and the necessary permissions. Create an IAM role with the AmazonSageMakerFullAccess policy or customize a more restrictive role based on your needs.

- Go to the IAM console.

- Create a new role for SageMaker.

- Attach policies that grant the S3, KMS, and CloudWatch access your workloads need.

This role will be assumed by SageMaker to access other AWS services securely.
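The same setup can be scripted. The sketch below creates a role that the SageMaker service is allowed to assume and attaches the managed `AmazonSageMakerFullAccess` policy, using boto3's IAM `create_role` and `attach_role_policy` calls. The function name is an assumption, and a production role would normally use a narrower policy.

```python
import json

# Trust policy: lets the SageMaker service assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "sagemaker.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonSageMakerFullAccess"


def create_sagemaker_role(iam_client, role_name):
    """Create an execution role for SageMaker and return its ARN."""
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=FULL_ACCESS_ARN)
    return role["Role"]["Arn"]
```

Here `iam_client` would be `boto3.client("iam")`; the returned ARN is what you pass as the role when creating notebooks, training jobs, and models.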

Launching Your First Notebook Instance

1. Open the SageMaker console.

2. Click “Notebook instances” > “Create notebook instance”.

3. Choose an instance type (start with ml.t3.medium).

4. Attach the IAM role you created.

5. Launch the instance and open Jupyter.

Once launched, you can upload a notebook or clone one from GitHub to start experimenting.
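If you prefer scripting to clicking, the console steps above correspond to one boto3 call, `create_notebook_instance`. The wrapper below is a minimal sketch; the function name and default volume size are assumptions.

```python
def launch_notebook(sagemaker_client, name, role_arn,
                    instance_type="ml.t3.medium", volume_gb=5):
    """Create a notebook instance and return its ARN."""
    response = sagemaker_client.create_notebook_instance(
        NotebookInstanceName=name,
        InstanceType=instance_type,
        RoleArn=role_arn,        # the IAM role created earlier
        VolumeSizeInGB=volume_gb,
    )
    return response["NotebookInstanceArn"]
```

The instance takes a few minutes to reach the `InService` state before Jupyter can be opened.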

Training and Deploying a Sample Model

Try the built-in XGBoost algorithm to predict customer churn:

- Upload a CSV dataset to S3.

- Create a training job using the XGBoost container.

- Deploy the trained model to a real-time endpoint.

- Test predictions using the AWS SDK or console.

AWS provides sample notebooks in the SageMaker GitHub repository to help you get started quickly.
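The steps above can be sketched with version 2.x of the SageMaker Python SDK. The built-in XGBoost algorithm expects CSV training data with the label in the first column and no header row, so the first helper reshapes the data accordingly. The function names, instance types, hyperparameters, and container version `1.7-1` are assumptions for illustration; `train_and_deploy` needs the `sagemaker` package and AWS credentials, and creates billable resources.

```python
import csv
import io


def to_xgboost_csv(rows, label_column):
    """Convert a list of dicts to headerless CSV text with the label first."""
    feature_names = [k for k in rows[0] if k != label_column]
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in rows:
        writer.writerow([row[label_column]] + [row[k] for k in feature_names])
    return buffer.getvalue()


def train_and_deploy(train_s3_uri, output_s3_uri, role_arn, region):
    """Train built-in XGBoost on CSV data in S3 and deploy an endpoint."""
    # Imported lazily so the helper above works without the SDK installed.
    import sagemaker
    from sagemaker.estimator import Estimator
    from sagemaker.inputs import TrainingInput

    image_uri = sagemaker.image_uris.retrieve("xgboost", region, version="1.7-1")
    estimator = Estimator(
        image_uri=image_uri,
        role=role_arn,
        instance_count=1,
        instance_type="ml.m5.xlarge",
        output_path=output_s3_uri,
    )
    estimator.set_hyperparameters(objective="binary:logistic", num_round=100)
    estimator.fit({"train": TrainingInput(train_s3_uri, content_type="text/csv")})
    return estimator.deploy(initial_instance_count=1, instance_type="ml.m5.large")
```

Remember to delete the endpoint when you are done testing, since real-time endpoints are billed for as long as they run.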

Future of AWS SageMaker and Emerging Trends

AWS SageMaker continues to evolve rapidly, introducing new features that push the boundaries of what’s possible in machine learning. As AI becomes more accessible, SageMaker is positioning itself as the go-to platform for both innovation and enterprise adoption.

Integration with Generative AI and SageMaker JumpStart

SageMaker JumpStart is a marketplace-like interface that provides pre-trained models, including state-of-the-art foundation models for natural language processing and computer vision.

- Access models like GPT-2, BERT, and Stable Diffusion.

- Fine-tune models on your data with minimal code.

- Deploy generative AI applications securely within your VPC.

This lowers the barrier to entry for generative AI, allowing businesses to experiment without massive computational resources.

Edge Machine Learning with SageMaker Neo and AWS IoT Greengrass

For applications that require low-latency inference on devices (e.g., cameras, robots), SageMaker Neo compiles models to run efficiently on edge hardware. Combined with AWS IoT Greengrass, this enables intelligent IoT applications that keep working offline.

- Optimize models for specific hardware (e.g., NVIDIA Jetson, Raspberry Pi).

- Reduce model size and improve inference speed.

- Deploy updates over-the-air via AWS IoT Greengrass.
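Compiling a model for a device is a single job submitted through boto3's `create_compilation_job`. The sketch below only builds the request, assuming a PyTorch model with one image-shaped input; the function name, input name `input0`, input shape, and time limit are illustrative assumptions.

```python
import json


def build_compilation_request(job_name, role_arn, model_s3_uri, output_s3_uri,
                              target_device="jetson_nano",
                              input_shape=(1, 3, 224, 224)):
    """Build keyword arguments for the boto3 create_compilation_job call."""
    return {
        "CompilationJobName": job_name,
        "RoleArn": role_arn,
        "InputConfig": {
            "S3Uri": model_s3_uri,
            # Input names and shapes are passed as a JSON string.
            "DataInputConfig": json.dumps({"input0": list(input_shape)}),
            "Framework": "PYTORCH",
        },
        "OutputConfig": {
            "S3OutputLocation": output_s3_uri,
            "TargetDevice": target_device,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 900},
    }
```

Switching `target_device` (for example to `rasp4b` for a Raspberry Pi 4) retargets the same model to different hardware without touching the training code.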

Democratizing AI with AutoML and Low-Code Tools

AWS is investing heavily in low-code and no-code solutions. SageMaker Canvas, for example, allows business analysts to generate predictions without writing code, using a point-and-click interface.

- Connect to data sources like S3 or Redshift.

- Build models with automated ML.

- Export predictions to Excel or BI tools.

This expansion makes machine learning accessible to non-technical users, accelerating AI adoption across organizations.

What is AWS SageMaker used for?

AWS SageMaker is used to build, train, and deploy machine learning models at scale. It supports the entire ML lifecycle, from data preparation to model deployment and monitoring, making it ideal for developers, data scientists, and enterprises looking to implement AI solutions efficiently.

Is AWS SageMaker free to use?

AWS SageMaker offers a free tier for new users, including 250 hours of notebook instances and 60 hours of training per month for two months. Beyond that, it operates on a pay-as-you-go pricing model based on usage of compute, storage, and inference resources.

How does SageMaker compare to Google Vertex AI or Azure ML?

SageMaker is often praised for its deep integration with the AWS ecosystem, extensive feature set, and scalability. While Google Vertex AI (the successor to Google AI Platform) and Azure ML offer similar capabilities, SageMaker stands out with tools like SageMaker Studio, JumpStart, and robust MLOps support, making it a preferred choice for AWS-centric organizations.

Can I use my own ML models in SageMaker?

Yes, you can bring your own models to SageMaker using custom Docker containers. This allows full control over the training and inference environment, supporting any framework or dependency you need.

Does SageMaker support real-time inference?

Absolutely. SageMaker supports real-time inference through hosted endpoints that provide low-latency predictions. It also offers serverless inference for variable workloads and batch transform for large-scale offline processing.

AWS SageMaker has redefined how organizations approach machine learning by offering a unified, scalable, and secure platform. From its intuitive studio environment to advanced MLOps capabilities, it empowers teams to innovate faster and deploy models with confidence. Whether you’re just starting out or scaling AI across the enterprise, SageMaker provides the tools, flexibility, and support needed to succeed in today’s data-driven world.
