AWS Glue: 7 Powerful Features You Must Know in 2024

Ever felt overwhelmed by messy data scattered across systems? AWS Glue might just be your ultimate data superhero. This fully managed ETL service simplifies how you prepare and load data for analytics—no servers to manage, no infrastructure headaches.

What Is AWS Glue and Why It Matters

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easier to move data between different data stores. It’s designed to automate much of the heavy lifting involved in data preparation, making it a go-to solution for data engineers and analysts alike.

Core Definition and Purpose

AWS Glue enables users to create, run, and monitor ETL pipelines with minimal manual intervention. It automatically discovers data through crawlers, catalogs it in a central repository, and generates code to transform it. This means you spend less time writing boilerplate code and more time analyzing the results.

- Automates schema discovery and data cataloging

- Generates Python or Scala code for ETL jobs

- Integrates seamlessly with other AWS services like S3, Redshift, and RDS

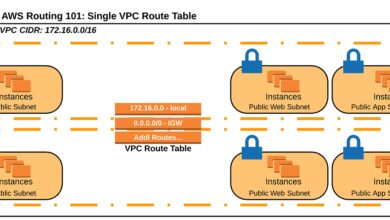

How AWS Glue Fits Into the Data Ecosystem

In modern data architectures, raw data often resides in silos—S3 buckets, databases, streaming platforms. AWS Glue acts as the connective tissue, transforming unstructured or semi-structured data into a format suitable for analytics. Whether you’re building a data lake or a data warehouse, AWS Glue streamlines the ingestion process.

“AWS Glue reduces the time to build ETL pipelines from weeks to hours.” — AWS Official Documentation

Key Components of AWS Glue

To understand how AWS Glue works, you need to know its core building blocks. Each component plays a specific role in the ETL workflow, from discovery to transformation and scheduling.

Data Catalog and Crawlers

The AWS Glue Data Catalog is a persistent metadata store that acts like a centralized repository for table definitions, schemas, and partition information. Crawlers scan your data sources (like Amazon S3, JDBC databases, or DynamoDB), infer schemas, and populate the catalog automatically.

- Crawlers support multiple formats: JSON, CSV, Parquet, ORC, Avro

- Can schedule crawlers to run periodically for up-to-date metadata

- Enables schema versioning and change tracking

For example, if you have logs stored in S3 in JSON format, a crawler can detect the structure, create a table in the Data Catalog, and make it queryable via Amazon Athena or Redshift Spectrum.
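To make the crawler step concrete, here is a minimal Python sketch that builds the request for the CreateCrawler API (boto3's create_crawler call). The crawler name, IAM role ARN, database, bucket, and daily schedule are hypothetical placeholders. The sketch only builds and prints the request, so it runs without AWS credentials.

```python
import json


def build_crawler_request(name, role_arn, database, s3_path):
    """Return keyword arguments for the Glue CreateCrawler API call."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        # Crawl every object under this S3 prefix (for example, JSON log files).
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        # Re-crawl daily at 02:00 UTC so new partitions and columns are cataloged.
        "Schedule": "cron(0 2 * * ? *)",
        # Update table definitions in place when the schema changes;
        # only log (don't delete) tables whose source objects disappear.
        "SchemaChangePolicy": {
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            "DeleteBehavior": "LOG",
        },
    }


# All names below are hypothetical placeholders.
request = build_crawler_request(
    name="app-logs-crawler",
    role_arn="arn:aws:iam::123456789012:role/GlueCrawlerRole",
    database="logs_db",
    s3_path="s3://example-bucket/app-logs/",
)
print(json.dumps(request, indent=2))

# With boto3 installed and AWS credentials configured, you could then run:
#   import boto3
#   boto3.client("glue").create_crawler(**request)
```

After the crawler finishes, the resulting table in logs_db is what Athena or Redshift Spectrum would query.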

ETL Jobs and Script Generation

Once data is cataloged, AWS Glue allows you to create ETL jobs that transform and load the data. You can either let AWS Glue auto-generate Python or Scala scripts or write custom logic. These jobs run on a serverless Apache Spark environment, so there’s no cluster management required.

- Supports both batch and incremental processing

- Auto-generated scripts are editable for customization

- Jobs can be triggered on-demand or via events (e.g., new file in S3)

You can add transformations like filtering, joining, aggregating, or enriching data using built-in Glue transforms or custom Spark code.

Triggers and Workflows

Orchestrating ETL pipelines is crucial for complex workflows. AWS Glue Triggers allow you to automate job execution based on schedules or events. Workflows provide a visual way to chain crawlers, jobs, and triggers into a single, manageable pipeline.

- Supports sequential, concurrent, and conditional execution

- Enables dependency management between components

- Provides monitoring and error handling at the workflow level

For instance, a workflow might start with a crawler to detect new data, trigger an ETL job to clean it, and then activate a Redshift load job—all without manual intervention.
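That crawler-then-clean-then-load chain maps onto Glue triggers attached to a workflow. The sketch below builds CreateTrigger requests in plain Python: one scheduled trigger starts the crawler, and two conditional triggers fire each job when the previous step succeeds. The workflow, crawler, and job names and the schedule are hypothetical, and the boto3 calls are shown only as comments.

```python
def conditional_trigger(workflow, name, condition, action):
    """CreateTrigger arguments that fire `action` once `condition` is met."""
    return {
        "Name": name,
        "WorkflowName": workflow,
        "Type": "CONDITIONAL",
        "Predicate": {"Logical": "AND", "Conditions": [condition]},
        "Actions": [action],
        "StartOnCreation": True,
    }


def build_workflow_triggers(workflow, crawler, clean_job, load_job):
    """Chain crawler -> clean job -> load job inside one Glue workflow."""
    start = {
        "Name": f"{workflow}-start",
        "WorkflowName": workflow,
        "Type": "SCHEDULED",
        "Schedule": "cron(0 3 * * ? *)",  # daily at 03:00 UTC
        "Actions": [{"CrawlerName": crawler}],
        "StartOnCreation": True,
    }
    after_crawl = conditional_trigger(
        workflow,
        f"{workflow}-after-crawl",
        {"LogicalOperator": "EQUALS", "CrawlerName": crawler, "CrawlState": "SUCCEEDED"},
        {"JobName": clean_job},
    )
    after_clean = conditional_trigger(
        workflow,
        f"{workflow}-after-clean",
        {"LogicalOperator": "EQUALS", "JobName": clean_job, "State": "SUCCEEDED"},
        {"JobName": load_job},
    )
    return [start, after_crawl, after_clean]


# Hypothetical resource names.
triggers = build_workflow_triggers("sales-pipeline", "sales-crawler", "clean-sales", "load-redshift")
for trigger in triggers:
    print(trigger["Name"], "->", trigger["Actions"][0])

# With boto3:
#   glue = boto3.client("glue")
#   glue.create_workflow(Name="sales-pipeline")
#   for trigger in triggers:
#       glue.create_trigger(**trigger)
```

Keeping each condition in its own trigger makes it easy to add branches later, for example a second conditional trigger that watches for a FAILED state.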

How AWS Glue Simplifies ETL Processes

Traditional ETL tools require significant setup, maintenance, and coding. AWS Glue changes the game with a serverless approach that can generate code for you while still letting you write your own, which accelerates development and reduces operational overhead.

Serverless Architecture Benefits

One of the biggest advantages of AWS Glue is its serverless nature. You don’t need to provision or manage clusters. AWS handles resource allocation, scaling, and patching automatically.

- No need to manage EC2 instances or EMR clusters

- Pay only for the compute time your jobs consume

- Optional Auto Scaling (Glue 3.0 and later) adds or removes workers based on the workload

This makes AWS Glue ideal for organizations without dedicated DevOps teams or those looking to reduce infrastructure costs.

Code Generation and Customization

AWS Glue can generate ETL scripts in Python (PySpark) or Scala (Spark) based on your source and target data. The generated code runs as-is, but it's fully editable, so data engineers can add complex logic like machine learning integration or advanced filtering.

- Reduces boilerplate coding effort

- Supports custom libraries and dependencies

- Supports version control through Git integration (for example, AWS CodeCommit or GitHub)

For example, you can enhance an auto-generated job to include anomaly detection using a pre-trained model from Amazon SageMaker.

Integration with AWS Analytics Services

AWS Glue integrates natively with key AWS analytics services, making it a cornerstone of the AWS data platform.

- Amazon Athena: Query data directly from S3 using SQL, powered by the Glue Data Catalog

- Amazon Redshift: Load transformed data into Redshift for high-performance analytics

- Amazon EMR: Use the Glue Data Catalog as the Apache Hive metastore for EMR jobs

- Amazon Kinesis: Process streaming data with Glue Streaming ETL jobs

This tight integration reduces data movement and improves consistency across tools.

Use Cases for AWS Glue in Real-World Scenarios

AWS Glue isn’t just a theoretical tool—it’s being used across industries to solve real data challenges. From retail to healthcare, organizations leverage AWS Glue to unify, clean, and analyze data at scale.

Data Lake Construction and Management

Building a data lake on Amazon S3 is a common use case. AWS Glue helps catalog data from various sources, enforce schema standards, and transform raw data into optimized formats like Parquet or ORC for faster querying.

- Automatically detects schema changes in incoming data

- Enforces schema evolution policies

- Enables fine-grained access control via Lake Formation

For example, a media company might use AWS Glue to ingest user engagement logs, catalog them, and transform them into a columnar format for cost-efficient analytics.

Database Migration and Modernization

When migrating from on-premises databases to the cloud, AWS Glue can extract data from legacy systems (via JDBC), transform it to meet modern schema requirements, and load it into Amazon RDS, Aurora, or Redshift.

- Supports heterogeneous migrations (e.g., Oracle to Redshift)

- Handles data type conversions and cleansing

- Can run on a schedule with job bookmarks to load new data incrementally

A financial institution might use AWS Glue to migrate customer transaction data while applying GDPR-compliant masking rules during transformation.

Streaming Data Processing

With AWS Glue Streaming ETL, you can process data from Amazon Kinesis Data Streams or Amazon MSK (Managed Streaming for Apache Kafka) in near real time. This is ideal for use cases like fraud detection, IoT telemetry, or live dashboards.

- Processes data in micro-batches (seconds to minutes)

- Supports stateful operations like windowing and sessionization

- Integrates with Amazon CloudWatch for monitoring

A logistics company could use streaming ETL to track shipment locations and trigger alerts for delays.
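The windowing idea behind that example can be illustrated without Spark. The following is a conceptual pure-Python sketch, not Glue's streaming API. It groups hypothetical shipment location pings into 60-second tumbling windows and flags shipments that went silent in a window. In an actual Glue streaming job, Spark would manage the micro-batches (for example through GlueContext.forEachBatch), and the window logic would be written with Spark functions.

```python
from collections import defaultdict


def tumbling_windows(events, window_seconds):
    """Group (epoch_seconds, shipment_id) events into fixed, non-overlapping windows."""
    windows = defaultdict(set)
    for ts, shipment_id in events:
        window_start = ts - (ts % window_seconds)  # align to the window boundary
        windows[window_start].add(shipment_id)
    return dict(windows)


def silent_shipments(expected_ids, windows, window_start):
    """Shipments that sent no location update during the given window."""
    return sorted(set(expected_ids) - windows.get(window_start, set()))


# Hypothetical location pings: (timestamp in seconds, shipment ID).
events = [(0, "A"), (30, "B"), (65, "A"), (90, "A")]
windows = tumbling_windows(events, window_seconds=60)
print(windows)
print(silent_shipments({"A", "B"}, windows, window_start=60))  # ['B'] -> raise a delay alert
```

Tumbling windows never overlap, so each ping counts toward exactly one window; sliding windows would trade that simplicity for smoother alerts.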

Performance Optimization Tips for AWS Glue

While AWS Glue is designed to be efficient, poorly configured jobs can lead to high costs or slow performance. Here are proven strategies to optimize your ETL pipelines.

Job Parameters and Worker Types

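AWS Glue offers different worker types with varying CPU, memory, and Apache Spark executor configurations. For example, a G.1X worker provides 1 DPU (4 vCPUs, 16 GB of memory) and a G.2X worker provides 2 DPUs (8 vCPUs, 32 GB); larger G.4X and G.8X types are also available. Choosing the right type impacts both performance and cost.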

- Use G.1X for lightweight jobs (small datasets, simple transforms)

- Use G.2X for memory-intensive operations (joins, aggregations)

- Adjust the number of workers based on data size and SLA requirements

You can also enable job bookmarks to process only new data, avoiding reprocessing the entire dataset.
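As a sketch of how these settings come together, the function below builds CreateJob arguments that choose G.2X for memory-intensive work and G.1X otherwise, and turns on job bookmarks and CloudWatch metrics through the job's default arguments. The job name, role ARN, script path, Glue version, and worker counts are hypothetical examples; tune them to your own data size and SLA.

```python
def build_job_request(name, role_arn, script_s3_path, memory_intensive, workers):
    """Return CreateJob keyword arguments for a Spark ETL job."""
    return {
        "Name": name,
        "Role": role_arn,
        "Command": {
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_s3_path,
            "PythonVersion": "3",
        },
        "GlueVersion": "4.0",
        # G.2X doubles memory per worker for joins and aggregations.
        "WorkerType": "G.2X" if memory_intensive else "G.1X",
        "NumberOfWorkers": workers,
        "DefaultArguments": {
            # Process only data not seen by previous successful runs.
            "--job-bookmark-option": "job-bookmark-enable",
            # Publish job metrics to CloudWatch.
            "--enable-metrics": "true",
        },
    }


# Hypothetical jobs: a heavy join and a light filter.
join_job = build_job_request(
    "orders-join", "arn:aws:iam::123456789012:role/GlueJobRole",
    "s3://example-bucket/scripts/orders_join.py", memory_intensive=True, workers=10)
filter_job = build_job_request(
    "logs-filter", "arn:aws:iam::123456789012:role/GlueJobRole",
    "s3://example-bucket/scripts/logs_filter.py", memory_intensive=False, workers=2)
print(join_job["WorkerType"], filter_job["WorkerType"])

# With boto3: boto3.client("glue").create_job(**join_job)
```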

Partitioning and Compression

How you store data in S3 significantly affects Glue job performance. Partitioning by date, region, or category allows Glue to read only relevant subsets. Using columnar formats like Parquet with compression (e.g., Snappy, GZIP) reduces I/O and speeds up queries.

- Partition large datasets to minimize scan volume

- Convert CSV/JSON to Parquet for better performance

- Use partition predicates in your ETL logic to filter early

For example, instead of scanning 1 TB of logs, partitioning by date lets you process just one day's data.
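Partition pruning works because Hive-style S3 paths encode partition values as key=value segments. The sketch below builds a predicate string of the form accepted by push_down_predicate and simulates, in plain Python, which partition paths such a filter would keep. The bucket, table, and partition columns (year, month, day) are hypothetical.

```python
def partition_predicate(**partition_values):
    """Build a predicate string such as "year='2024' AND month='05'"."""
    return " AND ".join(f"{key}='{value}'" for key, value in partition_values.items())


def prune(paths, **partition_values):
    """Keep only Hive-style S3 paths whose key=value segments match every filter."""
    wanted = {f"{key}={value}" for key, value in partition_values.items()}
    return [p for p in paths if wanted <= set(p.rstrip("/").split("/"))]


paths = [
    "s3://example-bucket/logs/year=2024/month=05/day=01/",
    "s3://example-bucket/logs/year=2024/month=05/day=02/",
    "s3://example-bucket/logs/year=2024/month=06/day=01/",
]
predicate = partition_predicate(year="2024", month="05", day="01")
print(predicate)  # year='2024' AND month='05' AND day='01'
print(prune(paths, year="2024", month="05", day="01"))

# Inside a Glue job, the same string can be passed when reading from the catalog:
#   glue_context.create_dynamic_frame.from_catalog(
#       database="logs_db", table_name="app_logs", push_down_predicate=predicate)
```

Only the first path survives, which is the point: the job never lists or reads the other days' objects.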

Monitoring and Debugging with CloudWatch

AWS Glue integrates with Amazon CloudWatch to provide logs, metrics, and alarms. Monitoring key metrics like shuffle spill, executor memory usage, and job duration helps identify bottlenecks.

- Set up CloudWatch alarms for job failures or long runtimes

- Analyze logs in CloudWatch Logs to debug transformation errors

- Use Glue’s built-in job metrics to track data input/output volume

You can also enable continuous logging to stream driver and executor logs to CloudWatch Logs while a job runs, and write Spark UI event logs to S3 for long-term analysis.
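Besides metric alarms, a common way to get failure alerts is an Amazon EventBridge (formerly CloudWatch Events) rule that matches Glue job state-change events. The sketch builds such an event pattern and includes a simplified local matcher so you can check a sample event; the matcher handles only exact-value lists, whereas real EventBridge matching supports many more operators. The job name, rule name, and SNS topic are hypothetical.

```python
import json


def failure_event_pattern(job_names):
    """EventBridge pattern matching failed or timed-out runs of the given Glue jobs."""
    return {
        "source": ["aws.glue"],
        "detail-type": ["Glue Job State Change"],
        "detail": {"jobName": list(job_names), "state": ["FAILED", "TIMEOUT"]},
    }


def matches(pattern, event):
    """Simplified local check: each pattern field must be present and hold an allowed value."""
    for key, allowed in pattern.items():
        if key not in event:
            return False
        if isinstance(allowed, dict):
            if not isinstance(event[key], dict) or not matches(allowed, event[key]):
                return False
        elif event[key] not in allowed:
            return False
    return True


pattern = failure_event_pattern(["orders-join"])
sample_event = {
    "source": "aws.glue",
    "detail-type": "Glue Job State Change",
    "detail": {"jobName": "orders-join", "state": "FAILED", "jobRunId": "jr_example"},
}
print(json.dumps(pattern))
print(matches(pattern, sample_event))  # True

# With boto3, register the rule and point it at an SNS topic (TOPIC_ARN is hypothetical):
#   events = boto3.client("events")
#   events.put_rule(Name="glue-job-failures", EventPattern=json.dumps(pattern))
#   events.put_targets(Rule="glue-job-failures", Targets=[{"Id": "sns", "Arn": TOPIC_ARN}])
```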

Security and Compliance in AWS Glue

Data security is non-negotiable. AWS Glue provides robust mechanisms to protect data at rest, in transit, and during processing.

Encryption and Access Control

AWS Glue supports encryption for both data and metadata. A Glue security configuration lets you use AWS KMS (Key Management Service) keys to encrypt data that jobs write to S3, CloudWatch logs, and job bookmarks, and you can encrypt the Data Catalog separately.

- Encrypt data in S3 using SSE-S3 or SSE-KMS

- Enable SSL/TLS for JDBC connections

- Use IAM roles to control access to Glue resources

For example, you can restrict Glue jobs to only access specific S3 prefixes using IAM policies.
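Here is a minimal sketch of such a policy, generated in Python so the bucket and prefix can be parameterized. It allows object reads and writes only under one prefix and limits ListBucket to that prefix. The bucket and prefix are hypothetical, and the job role would still need its usual Glue permissions (for example, the AWSGlueServiceRole managed policy) alongside this statement.

```python
import json


def s3_prefix_policy(bucket, prefix):
    """IAM policy document limiting S3 access to objects under one prefix."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadWriteObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                "Sid": "ListOnlyThePrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


policy = s3_prefix_policy("example-bucket", "raw/sales/")
print(json.dumps(policy, indent=2))
```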

Data Masking and Anonymization

In regulated industries, personally identifiable information (PII) must be protected. AWS Glue allows you to apply masking, hashing, or tokenization during ETL.

- Use built-in transforms like DropFields or ApplyMapping to remove sensitive columns

- Integrate with AWS Lambda for custom anonymization logic

- Leverage AWS Lake Formation for fine-grained row/column-level security

A healthcare provider might use Glue to redact patient names while preserving diagnostic codes for analysis.
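One way to implement that kind of redaction is keyed hashing (pseudonymization): the same name always maps to the same token, so records stay joinable, but the name itself is not exposed. The sketch below applies HMAC-SHA256 to hypothetical PII fields with Python's standard library. Inside a Glue job you might call similar logic from a Map.apply transform or a Spark UDF; the field names and key handling are assumptions, and a real key belongs in a secrets store, not in code.

```python
import hashlib
import hmac


def pseudonymize(value, key):
    """Deterministic keyed hash of a PII value (hex-encoded HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_record(record, pii_fields, key):
    """Return a copy of `record` with the listed PII fields replaced by tokens."""
    out = dict(record)
    for field in pii_fields:
        if out.get(field) is not None:
            out[field] = pseudonymize(str(out[field]), key)
    return out


# Hypothetical key and record; diagnostic codes are left untouched for analysis.
key = b"example-key-load-from-a-secrets-store"
row = {"patient_name": "Jane Roe", "diagnosis_code": "E11.9"}
print(redact_record(row, ["patient_name"], key))
```

If you never need to join on the field, dropping it outright (for example with DropFields) is simpler and leaves nothing to re-identify.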

Compliance with GDPR, HIPAA, and SOC

AWS Glue is in scope for major AWS compliance programs, including SOC reports and HIPAA eligibility. When configured correctly, it can support GDPR requirements (data minimization), HIPAA workloads (protected health information), and SOC 2 audits (audit logging).

- Enable AWS CloudTrail to log all Glue API calls

- Use VPC endpoints so traffic between your VPC and Glue stays on the AWS network instead of the public internet

- Regularly audit IAM policies and job configurations

For more details, refer to the AWS Compliance Programs page.

Advanced Features and Future Trends in AWS Glue

AWS Glue continues to evolve with new features that enhance scalability, usability, and integration. Staying updated ensures you leverage the full potential of the service.

Glue Studio: Visual ETL Development

AWS Glue Studio provides a visual interface to create, run, and monitor ETL jobs without writing code. It’s ideal for analysts or less technical users who want to build pipelines using drag-and-drop components.

- Drag-and-drop source, transform, and target nodes

- Real-time job monitoring and debugging

- Export jobs to scripts for version control

While Glue Studio simplifies development, it still runs on the same serverless Spark backend, ensuring performance and scalability.

Machine Learning Integration with Glue ML

AWS Glue includes built-in machine learning capabilities like FindMatches, which helps deduplicate and match records across datasets. For example, it can identify that “John Doe” and “J. Doe” refer to the same customer.

- Trains custom matching models with minimal labeled data

- Reduces manual data cleansing effort

- Improves data quality for analytics and reporting

This feature is particularly useful for CRM integration or customer 360 initiatives.

Serverless Spark and Future Roadmap

AWS is investing heavily in serverless data processing. Glue 4.0 already reads and writes Apache Hudi, Delta Lake, and Apache Iceberg tables natively, and Auto Scaling can size jobs for you. Future enhancements may include tighter integration with Amazon EMR Serverless, improved streaming capabilities, and AI-driven job optimization.

- Potential for auto-tuning job parameters based on historical performance

- Deeper support for open table formats such as Delta Lake and Apache Iceberg

- Better cost visibility and budgeting tools

Stay updated via the AWS Glue Features page.

Common Challenges and How to Overcome Them

Even with its advantages, AWS Glue can present challenges. Understanding these pitfalls helps you design more resilient pipelines.

Cost Management and Unexpected Spikes

Since AWS Glue charges based on DPU (Data Processing Unit) hours, long-running or inefficient jobs can lead to high costs. To mitigate this:

- Use job bookmarks to avoid reprocessing

- Optimize scripts to reduce shuffle operations

- Set up billing alerts in AWS Budgets

Monitor DPU usage in CloudWatch and optimize job concurrency.
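Because cost scales with DPU-hours, a quick estimate helps before you change worker types or counts. This sketch multiplies DPUs per worker by the number of workers and the billed runtime, applying the 1-minute minimum that Glue 2.0 and later use for Spark jobs. The $0.44 per DPU-hour rate is only a placeholder; prices vary by region, so look up your own on the pricing page.

```python
# DPUs per worker for common Glue worker types (Glue 2.0 and later).
DPU_PER_WORKER = {"G.025X": 0.25, "G.1X": 1, "G.2X": 2, "G.4X": 4, "G.8X": 8}


def dpu_hours(worker_type, workers, runtime_minutes):
    """DPU-hours for one run, applying a 1-minute minimum billing duration."""
    billed_minutes = max(runtime_minutes, 1.0)
    return DPU_PER_WORKER[worker_type] * workers * billed_minutes / 60


def estimated_cost(hours, price_per_dpu_hour):
    """Multiply DPU-hours by a region-specific price you look up yourself."""
    return hours * price_per_dpu_hour


hours = dpu_hours("G.2X", workers=10, runtime_minutes=30)
print(hours)  # 10.0
print(round(estimated_cost(hours, 0.44), 2))  # 4.4 at a hypothetical $0.44 per DPU-hour
```

The same arithmetic shows why inefficiency is expensive: if a shuffle-heavy job doubles its runtime, it doubles its DPU-hours.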

Schema Evolution and Data Drift

When source data changes (e.g., new columns, missing fields), Glue jobs may fail. To handle schema drift:

- Enable schema change detection in crawlers

- Register Avro, JSON Schema, or Protobuf schemas in the AWS Glue Schema Registry

- Implement error handling in scripts (e.g., try/except blocks in Python or try/catch in Scala)

Consider using AWS Glue Schema Registry to enforce compatibility policies (backward, forward, full).
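Before a job writes data, it can also help to check incoming records against the schema you expect so drift is reported rather than silently propagated. The sketch below is a plain-Python illustration with a hypothetical expected schema; it reports added columns, missing columns, and type changes. In a Glue script you would compare Spark schemas instead, and DynamicFrames can represent conflicting types as a choice type that you settle with resolveChoice.

```python
def schema_drift(expected, record):
    """Compare a record against an expected {column: python_type} schema."""
    added = sorted(set(record) - set(expected))
    missing = sorted(set(expected) - set(record))
    type_changed = sorted(
        col
        for col in set(expected) & set(record)
        # None is treated as "no value", not as a type change.
        if record[col] is not None and not isinstance(record[col], expected[col])
    )
    return {"added": added, "missing": missing, "type_changed": type_changed}


# Hypothetical expected schema and an incoming record that has drifted.
expected = {"id": int, "amount": float, "currency": str}
incoming = {"id": 1, "amount": "12.50", "channel": "web"}
print(schema_drift(expected, incoming))
```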

Error Handling and Retry Mechanisms

ETL jobs can fail due to network issues, data quality problems, or resource limits. Implementing robust error handling is critical.

- Configure job retries (the MaxRetries setting) and use exponential backoff when calling external systems

- Route failed records to a dead-letter queue (DLQ) in S3

- Send failure notifications via Amazon SNS

You can also use AWS Step Functions to build state machines for complex error recovery workflows.
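The backoff and dead-letter ideas above can be sketched in a few lines of Python. The first function retries a callable, doubling the delay after each failure up to a cap, with optional "full jitter" to avoid synchronized retries. The second splits records into valid ones and ones to route to a dead-letter location such as an S3 prefix. The function names, defaults, and validation rule are hypothetical; the sleep function is injectable so the behavior can be tested without waiting.

```python
import random
import time


def retry_with_backoff(fn, max_attempts=5, base_delay=1.0, max_delay=30.0,
                       jitter=True, retry_on=(Exception,), sleep=time.sleep):
    """Call fn(); on a retryable error wait base_delay * 2**attempt (capped) and try again."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * (2 ** attempt))
            if jitter:
                delay = random.uniform(0, delay)  # full jitter
            sleep(delay)


def split_valid(records, validate):
    """Separate good records from ones destined for a dead-letter location."""
    good, dead_letter = [], []
    for record in records:
        (good if validate(record) else dead_letter).append(record)
    return good, dead_letter


# Example: a flaky call that succeeds on the third attempt.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient network error")
    return "ok"


delays = []
print(retry_with_backoff(flaky, jitter=False, sleep=delays.append), delays)
```

Retrying only transient errors (via retry_on) matters: a data quality failure will fail the same way every time, so those records belong in the dead-letter split rather than in a retry loop.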

What is AWS Glue used for?

AWS Glue is used for automating ETL (extract, transform, load) processes. It helps discover, catalog, clean, and transform data from various sources into formats suitable for analytics, data lakes, and data warehouses.

Is AWS Glue serverless?

Yes, AWS Glue is a fully serverless service. It automatically provisions and scales Apache Spark environments for ETL jobs, so you don’t need to manage clusters or infrastructure.

How much does AWS Glue cost?

AWS Glue pricing is based on DPU (Data Processing Unit) hours for ETL jobs, crawler runtime, and Data Catalog usage. There’s no upfront cost, and you pay only for what you use. Check the official pricing page for details.

Can AWS Glue handle streaming data?

Yes, AWS Glue supports streaming ETL jobs that process data from Amazon Kinesis or MSK in near real time, enabling use cases like fraud detection and live analytics.

How does AWS Glue compare to Apache Airflow?

AWS Glue is focused on ETL automation with built-in code generation and cataloging, while Apache Airflow (or MWAA on AWS) is a workflow orchestration tool. They can be used together—Glue for transformation, Airflow for scheduling complex pipelines.

AWS Glue is a powerful, serverless ETL service that simplifies data integration across diverse sources. From automatic schema discovery to streaming processing and ML-powered deduplication, it offers a comprehensive toolkit for modern data teams. By leveraging its features wisely—optimizing performance, securing data, and managing costs—you can build scalable, reliable pipelines that drive business insights. As AWS continues to enhance Glue with serverless innovations and AI capabilities, its role in the cloud data ecosystem will only grow stronger.
