AWS CLI: 7 Powerful Ways to Master Cloud Control

Want to control your AWS resources like a pro? The AWS CLI gives you fast, scriptable access to Amazon’s cloud—no GUI needed. Discover how to install, configure, and supercharge your workflow with real-world commands and expert tips.

What Is AWS CLI and Why It’s a Game-Changer

The AWS Command Line Interface (CLI) is a powerful tool that allows developers, system administrators, and DevOps engineers to interact with Amazon Web Services directly from a terminal or script. Instead of navigating through the AWS Management Console, you can use simple commands to manage EC2 instances, S3 buckets, Lambda functions, and hundreds of other services.

Developed and maintained by Amazon, the AWS CLI is built on botocore, the same low-level Python library that underpins the AWS SDK for Python (boto3), which means it supports nearly all AWS services and operations available via APIs. This makes it not just convenient, but essential for automation, infrastructure-as-code practices, and large-scale deployments.

Core Features of AWS CLI

The AWS CLI stands out due to its robust feature set designed for efficiency and scalability. Some of its most important features include:

- Unified Interface: One tool to manage all AWS services—no need to switch between different consoles or SDKs.

- Scriptability: Easily integrate AWS commands into shell scripts, CI/CD pipelines, or automation workflows.

- Output Formatting: Supports multiple output formats like JSON, text, and table, making it easier to parse results programmatically.

- Auto-Pagination: Automatically retrieves all results across multiple API calls when dealing with large datasets.

- Auto-Prompt: In AWS CLI version 2, the auto-prompt feature (enabled with --cli-auto-prompt) suggests commands and parameters as you type, for faster command discovery.

These capabilities make the AWS CLI indispensable for anyone serious about managing AWS at scale. Whether you’re launching a single EC2 instance or orchestrating a multi-region deployment, the CLI streamlines the process.

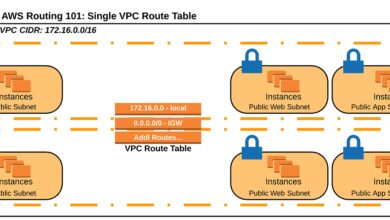

How AWS CLI Compares to Other Tools

While the AWS Management Console provides a user-friendly graphical interface, it lacks the speed and repeatability required in production environments. In contrast, the AWS CLI offers precision, consistency, and the ability to version-control your infrastructure changes.

Compared to using SDKs in programming languages like Python or JavaScript, the CLI is lighter and doesn’t require writing full applications. It’s ideal for quick tasks, debugging, and integration into existing shell environments.

Tools like Terraform or AWS CloudFormation are better suited for declarative infrastructure management, but the AWS CLI complements them perfectly during development and troubleshooting phases.

“The AWS CLI is the Swiss Army knife of cloud administration—simple, sharp, and always ready.”

Installing AWS CLI: Step-by-Step Guide

Before you can start using the AWS CLI, you need to install it on your machine. The installation process varies slightly depending on your operating system, but Amazon provides detailed instructions for all major platforms.

Installation on Windows

For Windows users, the easiest way to install AWS CLI is via the MSI installer provided by AWS. Here’s how:

- Download the AWS CLI MSI installer from the official AWS website.

- Run the downloaded file and follow the installation wizard.

- Once installed, open Command Prompt or PowerShell and run aws --version to verify the installation.

You can also use a package manager such as Chocolatey for automated installations:

choco install awscli

This method is especially useful in enterprise environments where consistent tooling across machines is critical.

Installation on macOS

macOS users have several options for installing the AWS CLI. For version 2, AWS distributes an official .pkg installer on its website. Installing with pip, Python’s package manager, gives you version 1 of the CLI:

pip3 install awscli --upgrade --user

Alternatively, you can use Homebrew, a popular macOS package manager:

brew install awscli

After installation, reload your shell profile or restart the terminal, then run aws --version to confirm everything works correctly.

Installation on Linux

Most Linux distributions support installing the AWS CLI via pip or through the installer that AWS provides. For systems with Python 3 and pip already installed, pip installs version 1 of the CLI:

pip3 install awscli --upgrade --user

If you prefer a standalone installer (especially useful in environments without Python), AWS provides a bundled installer for version 2. The commands below download the x86_64 build:

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

This method ensures you get the latest version directly from AWS, avoiding potential conflicts with system packages.

Configuring AWS CLI with IAM Credentials

Once installed, the next step is configuring the AWS CLI with proper credentials so it can authenticate your requests to AWS services. This is done using AWS Identity and Access Management (IAM) credentials.

Setting Up AWS Access Keys

To configure the CLI, you’ll need an access key ID and secret access key associated with an IAM user. Here’s how to generate them:

- Log in to the AWS Management Console.

- Navigate to IAM > Users > Select your user.

- Go to the Security credentials tab.

- Under Access keys, click Create access key.

- Download the CSV file containing the key pair and store it securely.

Never share or commit these keys to version control—they are equivalent to passwords.

Running aws configure

With your access keys ready, run the following command in your terminal:

aws configure

You’ll be prompted to enter:

- AWS Access Key ID

- AWS Secret Access Key

- Default region name (e.g., us-east-1)

- Default output format (e.g., json)

This stores your keys in ~/.aws/credentials and your default region and output format in ~/.aws/config. The CLI reads both files for authentication and defaults.

Pro Tip: Use IAM roles instead of long-term access keys in production environments to enhance security.

Using Named Profiles for Multiple Accounts

If you manage multiple AWS accounts (e.g., dev, staging, prod), you can create named profiles to switch between them easily:

aws configure --profile dev

This creates a profile called ‘dev’. You can then use it by appending --profile dev to any command:

aws s3 ls --profile dev

You can set a default profile by exporting the AWS_PROFILE environment variable:

export AWS_PROFILE=dev

This avoids having to specify the profile repeatedly.
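When juggling several profiles, it can help to confirm which account each one actually resolves to. The following is a minimal sketch that calls aws sts get-caller-identity once per profile. The helper name show_profile_accounts and the profile names in the example are illustrative assumptions, not part of the CLI.

```shell
# Print the AWS account ID behind each named profile.
# show_profile_accounts and the profile names passed to it are illustrative.
show_profile_accounts() {
    for profile in "$@"; do
        # sts get-caller-identity reports the account, user ID, and ARN
        # for whatever credentials the profile resolves to.
        if account=$(aws sts get-caller-identity \
                --profile "$profile" \
                --query 'Account' --output text 2>/dev/null); then
            printf '%s\t%s\n' "$profile" "$account"
        else
            printf '%s\t%s\n' "$profile" "credentials not usable" >&2
        fi
    done
}

# Example: show_profile_accounts dev staging prod
```

Profiles whose credentials are missing or expired are reported on stderr, so the normal output stays easy to parse.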

Mastering Basic AWS CLI Commands

Now that the AWS CLI is installed and configured, it’s time to explore some fundamental commands. These are the building blocks for interacting with AWS services and will form the core of your daily operations.

Navigating S3 with aws s3 Commands

Amazon S3 is one of the most widely used services, and the AWS CLI provides a dedicated set of high-level commands for managing buckets and objects.

To list all S3 buckets:

aws s3 ls

To upload a file to a bucket:

aws s3 cp local-file.txt s3://my-bucket/path/

To sync an entire directory:

aws s3 sync ./local-folder s3://my-bucket/backup/

The sync command is particularly powerful: it only transfers files that have changed, making it ideal for backups and deployments.
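Because sync can move many files at once, it is often worth previewing the transfer first. The sketch below uses the --dryrun option of aws s3 sync to list the planned operations, then asks for confirmation before running the real sync. The helper name preview_then_sync is a hypothetical wrapper, and the paths in the example are placeholders.

```shell
# Preview an S3 sync, then apply it only after confirmation.
# preview_then_sync and its arguments are illustrative names.
preview_then_sync() {
    src=$1
    dest=$2

    # --dryrun prints the operations sync would perform without running them.
    aws s3 sync "$src" "$dest" --dryrun || return 1

    # Ask before transferring anything.
    printf 'Apply these changes? [y/N] '
    read -r answer
    case "$answer" in
        y|Y) aws s3 sync "$src" "$dest" ;;
        *)   echo "Sync cancelled." ;;
    esac
}

# Example: preview_then_sync ./local-folder s3://my-bucket/backup/
```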

Managing EC2 Instances via CLI

Amazon EC2 allows you to launch and manage virtual servers in the cloud. With the AWS CLI, you can automate instance lifecycle management.

To launch a new EC2 instance:

aws ec2 run-instances --image-id ami-0abcdef1234567890 --instance-type t3.micro --key-name my-key-pair --security-group-ids sg-903004f8 --subnet-id subnet-6e7f829e

To stop an instance:

aws ec2 stop-instances --instance-ids i-1234567890abcdef0

To describe running instances:

aws ec2 describe-instances --filters "Name=instance-state-name,Values=running"

These commands give you full control over your compute resources without touching the console.
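The stop-instances call returns as soon as the request is accepted, not when the instance has actually stopped. A script that depends on the final state can pair it with the aws ec2 wait instance-stopped waiter, as in this sketch. The helper name stop_and_wait is an illustrative assumption.

```shell
# Stop an instance and block until EC2 reports it as stopped.
# stop_and_wait is an illustrative helper name.
stop_and_wait() {
    instance_id=$1

    aws ec2 stop-instances --instance-ids "$instance_id" >/dev/null || return 1

    # "aws ec2 wait" polls the instance state until the condition holds
    # and exits non-zero if it gives up first.
    aws ec2 wait instance-stopped --instance-ids "$instance_id" || return 1

    echo "$instance_id is stopped"
}

# Example: stop_and_wait i-1234567890abcdef0
```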

Working with IAM Policies and Users

You can also manage IAM entities directly from the CLI. For example, to create a new IAM user:

aws iam create-user --user-name john-doe

To attach a managed policy:

aws iam attach-user-policy --user-name john-doe --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess

To list all policies attached to a user:

aws iam list-attached-user-policies --user-name john-doe

This level of control enables automation of user provisioning and permission audits.

Advanced AWS CLI Techniques for Power Users

Once you’re comfortable with the basics, you can unlock even more power with advanced features like query filtering, scripting, and integration with other tools.

Using --query for JSON Filtering

The --query parameter allows you to filter and extract specific data from AWS CLI responses using JMESPath, a powerful query language for JSON.

For example, to get only the instance IDs and types of all EC2 instances, whatever their state:

aws ec2 describe-instances --query 'Reservations[*].Instances[*].[InstanceId,InstanceType]' --output table

To filter instances by state:

aws ec2 describe-instances --query 'Reservations[*].Instances[?State.Name==`running`].{ID:InstanceId,Type:InstanceType,IP:PublicIpAddress}' --output json

This reduces clutter and makes parsing output much easier, especially in scripts.
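In scripts, --query pairs well with --output text, because the result can be fed straight into a loop without a JSON parser. The sketch below assumes you want one running instance ID per line. The helper name list_running_instance_ids is illustrative.

```shell
# Print the IDs of running instances, one per line.
# list_running_instance_ids is an illustrative helper name.
list_running_instance_ids() {
    # With --output text, the IDs in a flat list are separated by tabs;
    # tr puts each ID on its own line so the result is easy to loop over.
    aws ec2 describe-instances \
        --filters "Name=instance-state-name,Values=running" \
        --query 'Reservations[].Instances[].InstanceId' \
        --output text | tr '\t' '\n'
}

# Example: print a line per running instance.
# list_running_instance_ids | while read -r id; do
#     echo "running: $id"
# done
```

Filtering on the server side with --filters and trimming on the client side with --query keeps both the response and the script small.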

Scripting AWS CLI Commands in Bash

You can embed AWS CLI commands in shell scripts to automate repetitive tasks. For example, a backup script might look like this:

#!/bin/bash

# Stop at the first failed command so a broken dump is never reported as a success.
set -euo pipefail

DATABASE_FILE="/backups/db-$(date +%Y%m%d).sql"

mysqldump -u root mydb > "$DATABASE_FILE"

aws s3 cp "$DATABASE_FILE" s3://my-backup-bucket/

echo "Backup uploaded successfully at $(date)"

Save this as backup.sh, make it executable with chmod +x backup.sh, and schedule it with cron for daily backups.

Combining AWS CLI with jq and Other Tools

For even more advanced data manipulation, combine AWS CLI with external tools like jq, a lightweight JSON processor.

For example, to extract public IPs of all running instances:

aws ec2 describe-instances --query 'Reservations[*].Instances[?State.Name==`running`].PublicIpAddress' --output json | jq -r '.[] | .[]'

This pipeline filters the JSON response and prints each IP on a new line, which is convenient for feeding into SSH loops or monitoring systems.

Best Practices for Secure and Efficient AWS CLI Usage

Using the AWS CLI effectively isn’t just about knowing commands—it’s also about following security and operational best practices.

Use IAM Roles Instead of Long-Term Keys

Long-term access keys pose a security risk if leaked. Whenever possible, use IAM roles with temporary credentials, especially on EC2 instances or in CI/CD pipelines.

When you assign an IAM role to an EC2 instance, the AWS CLI automatically retrieves temporary credentials from the instance metadata service—no manual configuration needed.
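Outside EC2, one common way to obtain temporary credentials is to assume a role with aws sts assume-role and export the result as environment variables. The following is a minimal sketch of that pattern. The helper name assume_role, the default session name, and the role ARN in the example are illustrative assumptions.

```shell
# Exchange the current credentials for temporary ones tied to a role.
# assume_role, the default session name, and the example ARN are illustrative.
assume_role() {
    role_arn=$1
    session_name=${2:-cli-session}

    creds=$(aws sts assume-role \
        --role-arn "$role_arn" \
        --role-session-name "$session_name" \
        --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
        --output text) || return 1

    # The three values come back whitespace-separated on one line.
    read -r AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN <<EOF
$creds
EOF
    export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN
}

# Example: assume_role arn:aws:iam::123456789012:role/example-role
```

After the function returns, later aws commands in the same shell use the temporary credentials until they expire.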

Enable Logging and Monitoring

Enable AWS CloudTrail to record the API calls that your CLI commands make. This provides an audit trail of who did what and when, which is crucial for compliance and incident response.

You can also use Amazon CloudWatch to monitor CLI-driven automation scripts and trigger alerts if something goes wrong.

Version Control Your Scripts

Treat your AWS CLI scripts like code. Store them in a Git repository, add comments, and review changes before deployment. This improves collaboration and reduces the risk of accidental misconfigurations.

Security First: Always follow the principle of least privilege—grant only the permissions necessary for a task.

Troubleshooting Common AWS CLI Issues

Even experienced users run into issues with the AWS CLI. Knowing how to diagnose and fix common problems can save hours of frustration.

Handling Authentication Errors

If you see errors like Unable to locate credentials or Invalid access key, check the following:

- Run aws configure list to verify current credentials.

- Ensure the ~/.aws/credentials file exists and has correct permissions.

- Check for typos in access keys or region names.

- If using profiles, confirm the correct profile is being used.

You can also set environment variables temporarily:

export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE

export AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

export AWS_DEFAULT_REGION=us-east-1

Resolving Region and Endpoint Errors

If a service isn’t available in your default region, the CLI typically fails with an error such as Could not connect to the endpoint URL. Double-check the configured region using:

aws configure get region

You can override it per command:

aws s3 ls --region us-west-2

Also, ensure the service is available in that region, since not every AWS service is offered in every region.

Debugging with the --debug Flag

The --debug flag provides detailed logs of every API call, including HTTP requests and responses:

aws s3 ls --debug

This is invaluable for understanding authentication flows, endpoint resolution, and error causes. Be cautious, though: it may expose sensitive data in logs.
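Debug output is written to stderr, so it can be kept apart from the command’s normal output and stored in a file with restricted permissions. The sketch below shows one way to do that. The helper name debug_to_file and the log path in the example are illustrative assumptions.

```shell
# Capture --debug output in a private file instead of the terminal.
# debug_to_file and the example log path are illustrative.
debug_to_file() {
    log_file=$1
    shift

    # Start with an empty file that only the current user can read.
    : > "$log_file" && chmod 600 "$log_file" || return 1

    # The CLI writes debug logging to stderr; stdout stays clean.
    aws "$@" --debug 2>> "$log_file"
}

# Example: debug_to_file /tmp/aws-debug.log s3 ls
```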

Integrating AWS CLI into DevOps Workflows

The AWS CLI is a cornerstone of modern DevOps practices. Its ability to automate cloud operations makes it a perfect fit for CI/CD pipelines, infrastructure provisioning, and monitoring.

Using AWS CLI in CI/CD Pipelines

In platforms like Jenkins, GitHub Actions, or GitLab CI, you can use the AWS CLI to deploy applications, push Docker images, or update Lambda functions.

Example GitHub Actions step:

- name: Deploy to S3
  run: aws s3 sync build/ s3://my-website-bucket/
  env:
    AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
    AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
    AWS_DEFAULT_REGION: us-east-1

This securely injects credentials and deploys static assets after a build.

Automating Infrastructure with CLI and CloudFormation

You can use the AWS CLI to deploy and manage CloudFormation stacks:

aws cloudformation create-stack --stack-name my-stack --template-body file://template.yaml --parameters ParameterKey=InstanceType,ParameterValue=t3.micro

This enables infrastructure-as-code workflows where templates are version-controlled and deployed consistently.
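In a pipeline, create-stack alone is rarely enough, because it returns before the stack finishes building. One possible arrangement, sketched here, validates the template first and then blocks on the stack-create-complete waiter. The helper name create_stack_and_wait is an illustrative assumption, and parameters are omitted for brevity.

```shell
# Validate a template, create the stack, and wait for it to finish.
# create_stack_and_wait and its arguments are illustrative.
create_stack_and_wait() {
    stack_name=$1
    template=$2

    # Catch template syntax errors before creating anything.
    aws cloudformation validate-template \
        --template-body "file://$template" >/dev/null || return 1

    aws cloudformation create-stack \
        --stack-name "$stack_name" \
        --template-body "file://$template" >/dev/null || return 1

    # Blocks until the stack reaches CREATE_COMPLETE;
    # exits non-zero if creation fails.
    aws cloudformation wait stack-create-complete \
        --stack-name "$stack_name" || return 1

    echo "Stack $stack_name created"
}

# Example: create_stack_and_wait my-stack template.yaml
```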

Monitoring and Alerting with CLI Scripts

Create custom monitoring scripts that check resource states and send alerts. For example, a script that checks unattached EBS volumes:

aws ec2 describe-volumes --filters Name=status,Values=available --query 'Volumes[*].VolumeId' --output text

Pair this with cron and email alerts to prevent wasted spending.
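To make that check usable from cron, it helps to wrap it in a function whose exit status says whether anything was found. The following is a rough sketch under that assumption. The helper name check_unattached_volumes and the exit-code convention (0 for none, 1 for volumes found, 2 for a failed API call) are choices made for illustration.

```shell
# Report unattached EBS volumes. Exit status: 0 = none found,
# 1 = volumes found, 2 = the AWS call itself failed.
# check_unattached_volumes and this convention are illustrative.
check_unattached_volumes() {
    volumes=$(aws ec2 describe-volumes \
        --filters Name=status,Values=available \
        --query 'Volumes[*].VolumeId' \
        --output text) || return 2

    # Treat empty output (or a literal "None") as "nothing to report".
    case "$volumes" in
        ''|None)
            echo "No unattached volumes."
            return 0
            ;;
    esac

    echo "Unattached volumes: $volumes"
    return 1
}

# Example: check_unattached_volumes || echo "Review EBS volumes"
```

A wrapper script can then send an email or post to a chat channel only when the function returns 1.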

What is AWS CLI used for?

The AWS CLI is used to manage Amazon Web Services from the command line. It allows users to perform actions like launching EC2 instances, managing S3 buckets, configuring IAM roles, and automating cloud operations through scripts and integrations.

How do I install AWS CLI on Linux?

You can install AWS CLI version 1 on Linux using pip: pip3 install awscli --upgrade --user, or install version 2 with the bundled installer from AWS’s official site using curl and unzip. Verify with aws --version.

Can I use AWS CLI with multiple accounts?

Yes, you can use named profiles with aws configure --profile profile-name to manage multiple AWS accounts. Switch between them using --profile profile-name or by setting the AWS_PROFILE environment variable.

How do I filter AWS CLI output?

Use the --query parameter with JMESPath expressions to filter JSON output. For example: aws ec2 describe-instances --query 'Reservations[*].Instances[?State.Name==`running`].InstanceId'.

Is AWS CLI secure?

Yes, when used properly. Always use IAM roles with temporary credentials instead of long-term access keys, enable CloudTrail logging, and follow the principle of least privilege to ensure secure usage.

The AWS CLI is far more than just a command-line tool—it’s a gateway to full control over your AWS environment. From simple file uploads to complex automation workflows, mastering the AWS CLI empowers you to work faster, smarter, and more securely. Whether you’re a developer, DevOps engineer, or cloud architect, investing time in learning the CLI will pay dividends in productivity and operational excellence. Start small, experiment often, and gradually build up your automation toolkit.
